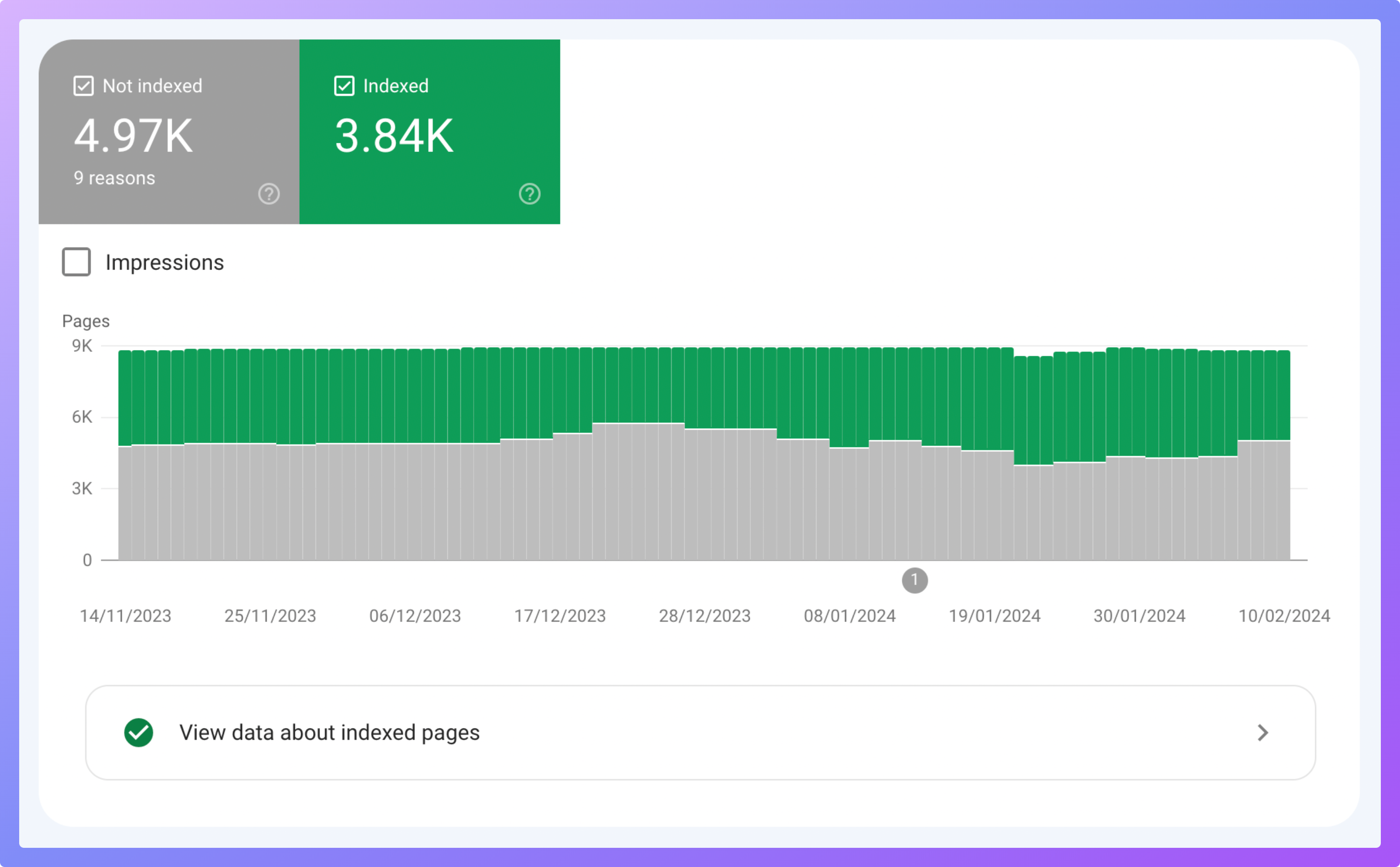

Large websites run into a common problem: Google does not index every page. The Pages report in Google Search Console often shows the gap:

Notably, some pages remain unindexed due to two main reasons:

To address this, you have a few strategies at your disposal:

This method can be slow, and there's no guarantee all pages will be indexed. It's impractical for websites with extensive content.

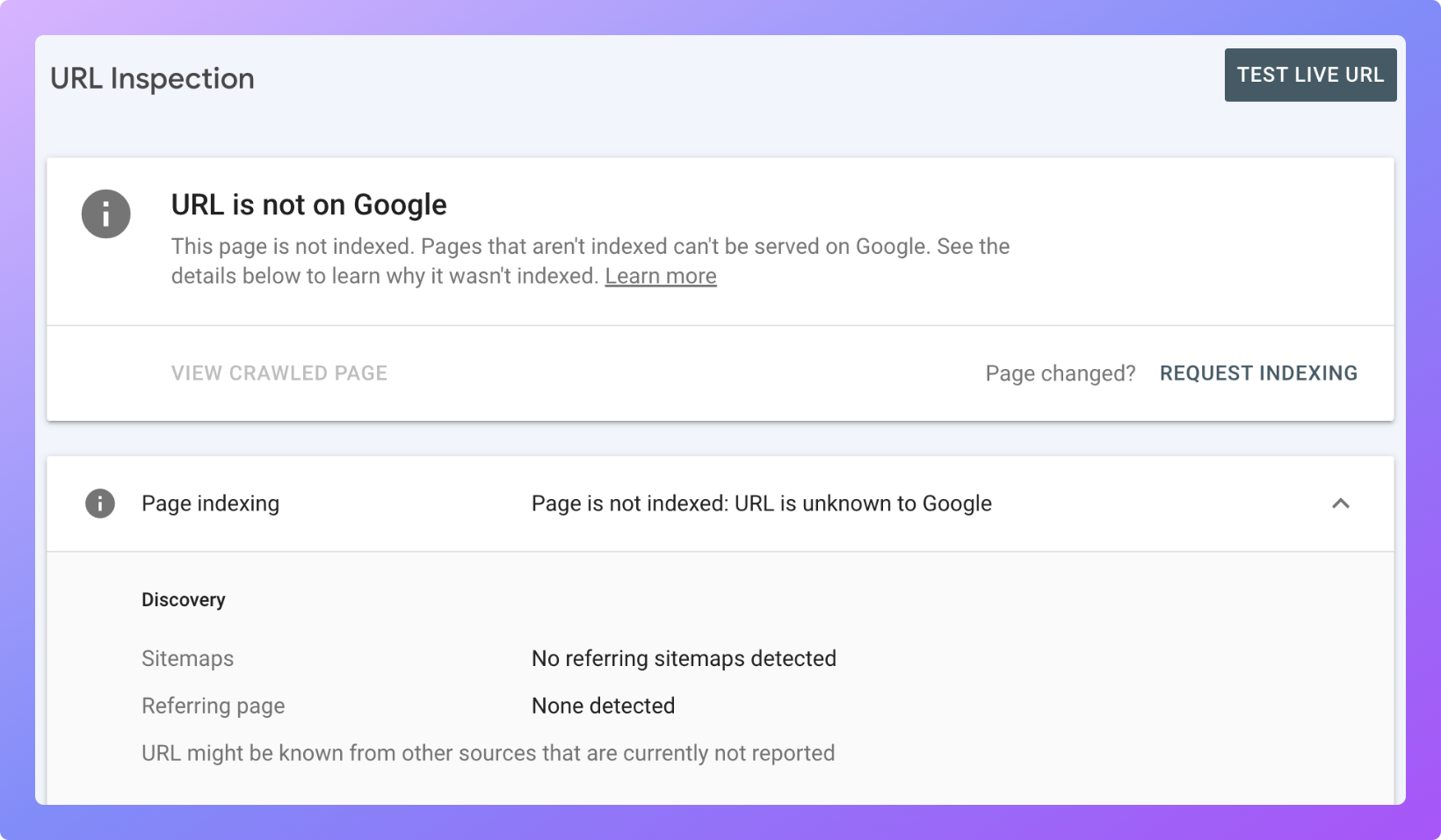

Submitting pages one at a time through the GSC URL Inspection tool is effective, but it is labor-intensive, especially for large sites.

Using Google's Indexing API is the most efficient way to get a large volume of pages indexed quickly. This article focuses on that approach.

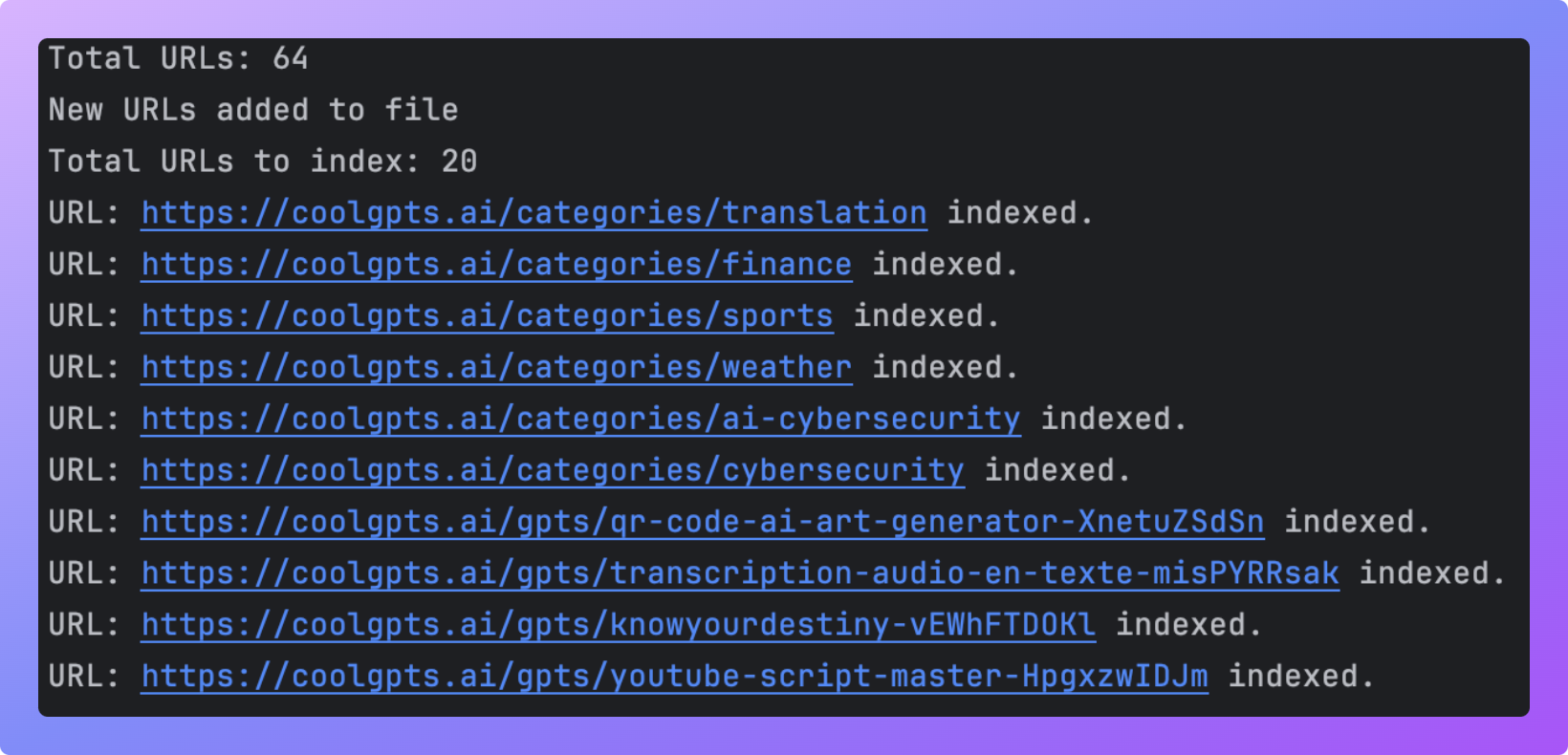

Google's Indexing API lets you notify Google as soon as you create or update content, which typically leads to faster crawling and indexing. We've developed a free, open-source Python script that automates page submission through this API; it is available in our GitHub Repository.
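To make the notification concrete, here is a minimal sketch of the request the Indexing API expects: a POST to its `urlNotifications:publish` endpoint with the page URL and a notification type. The helper names (`build_notification`, `build_request`, `publish`) are hypothetical and are not taken from the script in the repository. The sketch also assumes you already hold an OAuth 2.0 access token for the service account with the `https://www.googleapis.com/auth/indexing` scope; obtaining that token is left out.

```python
import json
import urllib.request

# Indexing API v3 endpoint for publishing URL notifications.
ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications:publish"


def build_notification(url, deleted=False):
    """Return the JSON body for a single URL notification."""
    return {"url": url, "type": "URL_DELETED" if deleted else "URL_UPDATED"}


def build_request(url, access_token, deleted=False):
    """Build (but do not send) the authenticated POST request."""
    body = json.dumps(build_notification(url, deleted)).encode("utf-8")
    return urllib.request.Request(
        ENDPOINT,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # Assumption: access_token was obtained elsewhere for the service account.
            "Authorization": f"Bearer {access_token}",
        },
    )


def publish(url, access_token):
    """Send the notification and return the decoded JSON response."""
    with urllib.request.urlopen(build_request(url, access_token)) as resp:
        return json.load(resp)
```

Keeping request construction separate from sending makes it possible to inspect the payload without touching the network or spending quota.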

Install the dependencies: `pip install -r requirements.txt`

Navigate to the Google Cloud Console to create a project.

Create a service account following these instructions, then download its JSON key and save it as credentials.json in your project directory. Under the Indexing API's default quota, this setup can submit up to 200 URLs per day.

Add the service account's email address to your Google Search Console property as a user with the Owner permission, as described here.
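Search Console asks for an email address when you add a user, and for a service account that address is stored in the downloaded key file. The sketch below reads it back so you can copy it into Search Console. It assumes the key was saved as `credentials.json` and has the usual `type` and `client_email` fields of a service account key; `service_account_email` is a hypothetical helper name.

```python
import json


def service_account_email(path="credentials.json"):
    """Return the client_email stored in a service account key file."""
    with open(path, encoding="utf-8") as fh:
        key = json.load(fh)
    # Service account keys identify themselves with type == "service_account".
    if key.get("type") != "service_account":
        raise ValueError(f"{path} is not a service account key file")
    return key["client_email"]
```

Calling `print(service_account_email())` from the project directory prints the address to add as an owner.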

In `index.py`, set `website_sitemap = 'https://example.com/sitemap.xml'`.

Adjust this to point to your sitemap. The script processes all pages listed in the sitemap, including those within any sitemap index files.
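As an illustration of how a sitemap and any nested sitemap index files can be walked, here is a minimal sketch using only the standard library. It is not the script's actual code: `collect_urls` and the `fetch` parameter are hypothetical names, and `fetch` stands for any callable that returns the XML for a given URL (for example a thin wrapper around an HTTP client).

```python
import xml.etree.ElementTree as ET

# XML namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def collect_urls(sitemap_url, fetch, seen=None):
    """Return page URLs from a sitemap, following sitemap index entries.

    `fetch` is any callable that maps a sitemap URL to its XML content.
    """
    seen = set() if seen is None else seen
    if sitemap_url in seen:  # guard against sitemaps that reference each other
        return []
    seen.add(sitemap_url)

    root = ET.fromstring(fetch(sitemap_url))
    if root.tag.endswith("sitemapindex"):
        # An index file lists further sitemaps; recurse into each one.
        urls = []
        for loc in root.findall("sm:sitemap/sm:loc", NS):
            if loc.text:
                urls.extend(collect_urls(loc.text.strip(), fetch, seen))
        return urls
    # A regular sitemap (urlset) lists the pages themselves.
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text]
```

Passing `fetch` in as a parameter keeps the parsing logic independent of any particular HTTP library and easy to try against local XML.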

Run the script: `python index.py`

The script submits up to 200 URLs per run and records them in the urls.json file so that later runs skip URLs that were already submitted. Run it once a day to make full use of the 200-request daily quota.
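The bookkeeping described above can be sketched as follows. This is an illustration under assumptions, not the script's implementation: it treats `urls.json` as a flat JSON list of already submitted URLs (the real file layout may differ), and `load_submitted`, `next_batch`, and `record_submitted` are hypothetical helper names.

```python
import json
import os

DAILY_QUOTA = 200  # daily limit described in this article


def load_submitted(path="urls.json"):
    """Return the list of URLs submitted so far (empty on first run)."""
    if not os.path.exists(path):
        return []
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)


def next_batch(sitemap_urls, submitted, quota=DAILY_QUOTA):
    """Pick the next not-yet-submitted URLs, capped at the quota."""
    done = set(submitted)
    # dict.fromkeys drops duplicate sitemap entries while keeping their order.
    pending = [u for u in dict.fromkeys(sitemap_urls) if u not in done]
    return pending[:quota]


def record_submitted(batch, path="urls.json"):
    """Append a submitted batch to the tracking file."""
    submitted = load_submitted(path)
    submitted.extend(batch)
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(submitted, fh, indent=2)
```

With this scheme, a site with 450 pages would be covered in three daily runs of 200, 200, and 50 URLs.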

This method offers a streamlined approach to ensure your website's content is efficiently indexed by Google.